Month: October 2024

UAV Toolbox Support for PX4 Autopilots – HIL_SENSOR MAVLink message data not accepted by stock PX4 flight controller

Hi everyone,

I am experiencing a strange issue creating a Simulink quadrotor simulation environment for testing the stock PX4 flight controller. I am adapting the model from this Simulink PX4 example: Monitor and Tune PX4 Host Target Flight Controller with Simulink-Based Plant Model. However, instead of running the flight controller on PX4 by creating a custom Simulink controller, I want to use the stock PX4 flight controller.

At first I tried running PX4 with just "make px4_sitl none_iris" in a fresh PX4 installation and then running the Simulink model. This partly works: PX4 waits for a connection from a simulator and connects when the plant model runs. However, it immediately reports 14 pre-flight failures, so it does not arm. It does not display all of these errors, but the ones shown seem to be related to the sensors, plus others that are not listed.

I found some errors in the original Simulink-based plant model (the heartbeat message broadcasts that the vehicle is a submarine, although the heartbeat does not seem to be necessary in the first place), as well as some incorrect units being published to MAVLink. Fixing these does not seem to help.

I found a Python file that creates a dummy drone that is convincing enough for PX4 to connect to. The code is attached to this post in the dummy sim file. Running the above make command and then the sim convinces PX4 that everything is good to go, and the commander arms. I created a new Simulink file that just populates HIL_GPS, HIL_SENSOR and HIL_STATE_QUATERNION with the same data as the Python file (base values, random offsets, scale, etc.).

Running PX4 and connecting to this sim clears 9 of the 14 pre-flight failures. The remaining 5 are all connected to the sensor message: the flight controller claims it is receiving invalid or no data from the accelerometer, gyroscope, compass and barometer. An image of the error is attached below.

All of this information should be provided by the HIL_SENSOR message, which is fully populated in the Simulink file and sent over UDP/TCP the same way as all the other MAVLink messages. Why the HIL_SENSOR data specifically is not working is beyond me. The same values in the Python script seem to be fine. Also, every sensor is failing, so I doubt I have messed up just one or two inputs in the Simulink bus assignment. Attached below is an image of the HIL_SENSOR assignment. I have also attached the Simulink file (called MinimumViableModel) as well as the necessary support scripts.
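One thing that may be worth checking when every sensor fails at once is the fields_updated bitmask of HIL_SENSOR. The sketch below is not taken from the attached model; it lists the bit values from the MAVLink HIL_SENSOR_UPDATED_FLAGS enum and groups them the way PX4's SITL simulator module appears to (the grouping is an assumption from memory and should be verified against the PX4 version in use). The helper name missing_sensor_groups is hypothetical.

```python
from enum import IntFlag
from typing import List


class HilSensorUpdated(IntFlag):
    """Bit values of the MAVLink HIL_SENSOR fields_updated bitmask."""
    XACC = 1 << 0
    YACC = 1 << 1
    ZACC = 1 << 2
    XGYRO = 1 << 3
    YGYRO = 1 << 4
    ZGYRO = 1 << 5
    XMAG = 1 << 6
    YMAG = 1 << 7
    ZMAG = 1 << 8
    ABS_PRESSURE = 1 << 9
    DIFF_PRESSURE = 1 << 10
    PRESSURE_ALT = 1 << 11
    TEMPERATURE = 1 << 12


F = HilSensorUpdated

# Assumed grouping: a sensor counts as "present" only if all of its bits are set.
SENSOR_GROUPS = {
    "accel": int(F.XACC | F.YACC | F.ZACC),
    "gyro": int(F.XGYRO | F.YGYRO | F.ZGYRO),
    "mag": int(F.XMAG | F.YMAG | F.ZMAG),
    "baro": int(F.ABS_PRESSURE | F.PRESSURE_ALT | F.TEMPERATURE),
}

# Mask with all thirteen field bits set.
ALL_FIELDS = sum(int(flag) for flag in HilSensorUpdated)


def missing_sensor_groups(fields_updated: int) -> List[str]:
    """Return the sensor groups whose bits are not all set in fields_updated."""
    return [name for name, mask in SENSOR_GROUPS.items()
            if fields_updated & mask != mask]


print(hex(ALL_FIELDS))             # mask to send when every field is populated
print(missing_sensor_groups(0))    # groups that would look absent with a zero mask
```

If the Simulink bus leaves fields_updated at zero, or the signal has the wrong data type, all four groups would be reported missing at once, which would be consistent with the symptom described above.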

Any help would be appreciated! I hope I have attached everything necessary to reproduce the issue.

simulink, sitl, software in the loop, px4, sensor data MATLAB Answers - New Questions

How to Automatically Upload AEwin .DTA Files to a Web Server Using MATLAB or Python?

Hello everyone,

I am working on automating the upload of Acoustic Emission data collected via AEwin, which stores data in .DTA format. My goal is to:

Automatically process the .DTA files generated by AEwin.

Extract key data from these .DTA files.

Upload this data to a web server (such as ThingSpeak or a custom server) in real-time.

What I Have Tried:

Python: I've tried reading the .DTA file using the MistrasDTA Python library, but encountered an error.

MATLAB: While I haven’t tried MATLAB yet, I am wondering if it could be a better alternative for extracting data from .DTA files and uploading it to a server.

My Questions:

Has anyone successfully processed AEwin .DTA files in MATLAB? If so, what functions or toolboxes are recommended for this task?

Is there a way to automate this process fully (i.e., automatically upload the data to a web server whenever a new .DTA file is created)?

Any suggestions or examples of how to integrate this workflow between AEwin and a server would be greatly appreciated.
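The "whenever a new .DTA file is created" part can be sketched independently of how the file is parsed or uploaded. The following is a minimal polling sketch using only the Python standard library; the function names and the handle callback are hypothetical, and the parsing and upload steps are deliberately left to that callback.

```python
import time
from pathlib import Path
from typing import Callable, List, Optional, Set


def find_new_dta_files(folder: Path, seen: Set[Path]) -> List[Path]:
    """Return .DTA files in folder that are not in seen, oldest first."""
    candidates = [
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() == ".dta" and p not in seen
    ]
    return sorted(candidates, key=lambda p: p.stat().st_mtime)


def watch_folder(folder: Path,
                 handle: Callable[[Path], None],
                 poll_seconds: float = 5.0,
                 max_polls: Optional[int] = None) -> None:
    """Poll folder and call handle(path) once for each new .DTA file.

    max_polls=None polls forever; a number makes the loop finite.
    """
    seen: Set[Path] = set()
    polls = 0
    while max_polls is None or polls < max_polls:
        for path in find_new_dta_files(folder, seen):
            handle(path)      # parse and upload here (caller-supplied)
            seen.add(path)
        polls += 1
        if max_polls is None or polls < max_polls:
            time.sleep(poll_seconds)
```

A call such as watch_folder(Path("C:/AEwin/data"), upload_one) would then run the caller's upload_one function for each new file (both the path and upload_one are placeholders). A file that AEwin is still writing may be picked up too early, so a real setup would probably wait until the file size stops changing before handling it.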

Thank you in advance for any help or suggestions you can offer!

.dta file processing, aewin, data automation, thingspeak, python matlab integration MATLAB Answers - New Questions

How can I create a new table by combining rows with identical names but different data under the same variables?

I have two large tables that have the same first column and the same variables. The only difference is the data. I want to combine the rows with identical names into a new table in which they appear grouped, and then run a t-test on them.

tables MATLAB Answers - New Questions

Excel workbook with links freezes for several minutes

I have an Excel file with links and many formulas. The formulas are not slowing the workbook down; I can work with it fluidly, but every so often it freezes for 5-6 minutes.

I am working with files on a LAN.

I've tried disabling automatic refresh of workbook links, and also, in Trust Center under External Content, disabling automatic update of workbook links, but the behavior is the same.

My workaround is to update the values, remove the links, save the workbook as another file, and then work in that copy.

Does anybody know why Excel freezes (stops responding) for several minutes and then recovers?


How to Access Your Android Phone Files from File Explorer on Windows 11 wirelessly

Hi,

I read an article saying that with Windows 11 build 24H2 it would be possible to access Android phone files from File Explorer wirelessly.

However, this functionality does not appear in the system settings as described here: Hoe u toegang krijgt tot uw Android-telefoonbestanden vanuit Verkenner op Windows 11 – TWCB (NL) (thewindowsclub.blog)

I use Insider Preview, so I suppose it's still in its test phase?

It would be really nice to have this implemented.

Best regards,

Mario.

SUMIFS on criteria range with leading zero in text

     A        B
1    '006     1
2    '06      2
3    '6       4
4
5    Total    =SUMIFS(B1:B3, A1:A3, "06")

The result of =SUMIFS(B1:B3, A1:A3, "06") is 7, while it should be 2.

The same problem occurs with COUNTIFS.

A possible workaround is to add a letter in front of the leading-zero text values (e.g. "V006", "V06" and "V6" in the range A1:A3).
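The result of 7 looks consistent with the criterion being compared numerically whenever both the cell text and the criterion look like numbers, so that '006, '06 and '6 all count as 6. The Python sketch below only imitates that suspected behavior next to an exact text comparison; it is an illustration under that assumption, not a description of Excel's internals, and the function names are made up for the example.

```python
from typing import Callable, List, Tuple

Row = Tuple[str, int]

# Column A (stored as text) and column B from the example above.
ROWS: List[Row] = [("006", 1), ("06", 2), ("6", 4)]


def coercing_match(cell_text: str, criterion: str) -> bool:
    """Compare as numbers when both sides parse as numbers, else as text."""
    try:
        return float(cell_text) == float(criterion)
    except ValueError:
        return cell_text == criterion


def exact_match(cell_text: str, criterion: str) -> bool:
    """Plain text comparison, which keeps leading zeros significant."""
    return cell_text == criterion


def sum_if(rows: List[Row], criterion: str,
           match: Callable[[str, str], bool]) -> int:
    """Sum column B over the rows whose column A matches the criterion."""
    return sum(value for key, value in rows if match(key, criterion))


print(sum_if(ROWS, "06", coercing_match))  # all three rows match
print(sum_if(ROWS, "06", exact_match))     # only the '06 row matches
```

Under the same assumption, the "V" prefix works because "V06" no longer parses as a number, so the comparison falls back to text. A formula that compares text directly, such as =SUMPRODUCT((A1:A3="06")*B1:B3), is a commonly suggested alternative, though it should be checked against the actual workbook.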


SharePoint Audience Targeting – Groups

Hi.

Can I use mail-enabled security groups for audience targeting?

I tried this many months ago and it worked.

Now we have created a new mail-enabled security group to target some menu links, but it is not working and we don't know why.

Has anything changed in the last few months?

Thank you


How to solve Action Timed Out error?

Hi all,

We have a pipeline scheduled to run at night, and it has had many timeout errors. The dataflow usually takes only 7 minutes, but it failed after 2 hours by timing out. It succeeded when rerun, and the dataflow took only 6 min 45 sec. I can only see the details below.

The dataflow's source is a table in Databricks and its target is Synapse. The source has 198K records and the target has 82.1M. What could be the cause?


Using Graph API to send an individual message on Teams

Dear Team,

I need to send a Microsoft Teams message to an individual user using Python. My code is below.

import requests

# Step 1: Obtain Access Token
tenant_id = 'code'
client_id = 'code'
client_secret = 'code'

# Token endpoint
token_url = f'https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token'
token_headers = {'Content-Type': 'application/x-www-form-urlencoded'}

# Token request data
token_data = {
    'grant_type': 'client_credentials',
    'client_id': client_id,
    'client_secret': client_secret,
    'scope': 'https://graph.microsoft.com/.default'
}

# Request token
token_response = requests.post(token_url, headers=token_headers, data=token_data)

if token_response.status_code == 200:
    access_token = token_response.json().get('access_token')
    print("Access token obtained:", access_token)

    # Step 2: Send Message
    user_id = 'email address removed for privacy reasons'  # Replace with recipient's ID or email
    message_url = f'https://graph.microsoft.com/v1.0/users/{user_id}/chatMessages'
    message_body = {
        "body": {
            "content": "Hello! This is an automated message."
        }
    }
    message_headers = {
        'Authorization': f'Bearer {access_token}',
        'Content-Type': 'application/json'
    }

    # Send message
    message_response = requests.post(message_url, json=message_body, headers=message_headers)
    if message_response.status_code == 201:
        print("Message sent successfully!")
    else:
        print("Error sending message:", message_response.status_code, message_response.text)
else:
    print("Error obtaining token:", token_response.status_code, token_response.text)

I am getting the error below:

Error sending message: 405 {"error":{"code":"Request_BadRequest","message":"Specified HTTP method is not allowed for the request target."
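The 405 is probably because POST is not supported on /users/{id}/chatMessages. As far as the Graph documentation describes it, a one-on-one message is sent in two steps: create (or look up) the chat with POST /chats, then POST to /chats/{chat-id}/messages. The sketch below only builds the two request URLs and JSON bodies so the shapes can be inspected; it makes no network call, and the function names are hypothetical. Sending chat messages also generally requires a delegated (signed-in user) token rather than a client-credentials token, so that part of the flow may need to change as well; please verify both points against the current Graph documentation.

```python
from typing import Dict, Tuple

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def _member(user_id: str) -> Dict[str, object]:
    """Build one conversation-member entry for the create-chat body."""
    return {
        "@odata.type": "#microsoft.graph.aadUserConversationMember",
        "roles": ["owner"],
        "user@odata.bind": f"{GRAPH_BASE}/users('{user_id}')",
    }


def build_one_on_one_chat_request(sender_id: str,
                                  recipient_id: str) -> Tuple[str, Dict[str, object]]:
    """Return (url, json_body) for creating a one-on-one chat."""
    body = {
        "chatType": "oneOnOne",
        "members": [_member(sender_id), _member(recipient_id)],
    }
    return f"{GRAPH_BASE}/chats", body


def build_chat_message_request(chat_id: str,
                               text: str) -> Tuple[str, Dict[str, object]]:
    """Return (url, json_body) for posting a message into an existing chat."""
    return f"{GRAPH_BASE}/chats/{chat_id}/messages", {"body": {"content": text}}
```

Each pair can be passed to requests.post(url, json=body, headers=...) with the same Authorization header style used in the code above; the id field of the first response is the chat_id for the second request.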

Custom Policy- Reusable Logs Templates

Reusable Templates for Sending Logs to Log Analytics Workspace

Challenge:

When it comes to developing custom policies for sending logs to a Log Analytics workspace for any Azure resource, there are multiple approaches one can take. However, if a customer wants a custom policy for all Azure resources in their environment, simply copying and pasting the same policy definition can lead to issues. We might miss copying it fully, or the way it works for one Azure resource to another may vary, requiring us to change the logic accordingly.

Solution:

To address the above-mentioned challenge, we can define standardized templates that can be reused, modifying only the specific parts of the template that depend on the Azure product where it is applied. This approach ensures consistency and reduces the risk of errors.

Below are two different templates created with this in mind. If Template 1 does not work, then Template 2 can be reused and tested accordingly.

Template 1 (Basic)

This is a basic template that can be used for the majority of Azure resources that offer the option to enable diagnostic settings. If this template does not work, please proceed with Template 2 as defined in this document.

Reference For Template 1:

{
  "name": "(Name of Policy)",
  "type": "Microsoft.Authorization/policyDefinitions",
  "apiVersion": "2022-09-01",
  "scope": null,
  "properties": {
    "displayName": "(Policy Display Name)",
    "policyType": "Custom",
    "description": "(policy description)",
    "mode": "All",
    "metadata": {
      "version": "1.0.0",
      "category": "(as per product)"
    },
    "parameters": {
      "effect": {
        "type": "String",
        "metadata": {
          "displayName": "Effect",
          "description": "Enable or disable the execution of the policy"
        },
        "allowedValues": [
          "DeployIfNotExists",
          "AuditIfNotExists",
          "Disabled"
        ],
        "defaultValue": "DeployIfNotExists"
      },
      "profileNameLAW": {
        "type": "String",
        "metadata": {
          "displayName": "Profile name",
          "description": "The diagnostic settings profile name"
        },
        "defaultValue": "setbypolicyLAW"
      },
      "logAnalytics": {
        "type": "String",
        "metadata": {
          "displayName": "Log Analytics workspace",
          "description": "Select Log Analytics workspace from dropdown list.",
          "strongType": "omsWorkspace",
          "assignPermissions": true
        }
      },
      "logsEnabledLAWBoolean": {
        "type": "Boolean",
        "metadata": {
          "displayName": "Enable logs",
          "description": "Whether to enable logs stream to the Log Analytics workspace – True or False"
        },
        "allowedValues": [
          true,
          false
        ],
        "defaultValue": true
      },
      "metricsEnabledLAWBoolean": {
        "type": "Boolean",
        "metadata": {
          "displayName": "Enable metrics",
          "description": "Whether to enable metrics stream to the Log Analytics workspace – True or False"
        },
        "allowedValues": [
          true,
          false
        ],
        "defaultValue": true
      },
      "evaluationDelay": {
        "type": "String",
        "metadata": {
          "displayName": "Evaluation Delay",
          "description": "Specifies when the existence of the related resources should be evaluated. The delay is only used for evaluations that are a result of a create or update resource request. Allowed values are AfterProvisioning, AfterProvisioningSuccess, AfterProvisioningFailure, or an ISO 8601 duration between 0 and 360 minutes."
        },
        "defaultValue": "AfterProvisioning"
      }
    },
    "policyRule": {
      "if": {
        "field": "type",
        "equals": "(please put resource type here)"
      },
      "then": {
        "effect": "[parameters('effect')]",
        "details": {
          "type": "Microsoft.Insights/diagnosticSettings",
          "evaluationDelay": "[parameters('evaluationDelay')]",
          "name": "[parameters('profileNameLAW')]",
          "existenceCondition": {
            "allOf": [
              {
                "field": "Microsoft.Insights/diagnosticSettings/logs[*].enabled",
                "equals": "[parameters('logsEnabledLAWBoolean')]"
              },
              {
                "field": "Microsoft.Insights/diagnosticSettings/metrics[*].enabled",
                "equals": "[parameters('metricsEnabledLAWBoolean')]"
              },
              {
                "field": "Microsoft.Insights/diagnosticSettings/workspaceId",
                "equals": "[parameters('logAnalytics')]"
              }
            ]
          },
          "roleDefinitionIds": [
            "/providers/microsoft.authorization/roleDefinitions/749f88d5-cbae-40b8-bcfc-e573ddc772fa",
            "/providers/microsoft.authorization/roleDefinitions/92aaf0da-9dab-42b6-94a3-d43ce8d16293"
          ],
          "deployment": {
            "properties": {
              "mode": "incremental",
              "template": {
                "$schema": "http://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
                "contentVersion": "1.0.0.0",
                "parameters": {
                  "resourceName": {
                    "type": "string"
                  },
                  "location": {
                    "type": "string"
                  },
                  "logAnalytics": {
                    "type": "string"
                  },
                  "logsEnabledLAWBoolean": {
                    "type": "bool"
                  },
                  "metricsEnabledLAWBoolean": {
                    "type": "bool"
                  },
                  "profileNameLAW": {
                    "type": "string"
                  }
                },
                "variables": {},
                "resources": [
                  {
                    "type": "(please put resource type here)/providers/diagnosticSettings",
                    "apiVersion": "2021-05-01-preview",
                    "name": "[concat(parameters('resourceName'), '/', 'Microsoft.Insights/', parameters('profileNameLAW'))]",
                    "location": "[parameters('location')]",
                    "dependsOn": [],
                    "properties": {
                      "workspaceId": "[parameters('logAnalytics')]",
                      "logs": [
                        {
                          "categoryGroup": "allLogs",
                          "enabled": "[parameters('logsEnabledLAWBoolean')]"
                        }
                      ],
                      "metrics": [
                        {
                          "category": "AllMetrics",
                          "enabled": "[parameters('metricsEnabledLAWBoolean')]"
                        }
                      ]
                    }
                  }
                ],
                "outputs": {}
              },
              "parameters": {
                "location": {
                  "value": "[field('location')]"
                },
                "resourceName": {
                  "value": "[field('fullName')]"
                },
                "logAnalytics": {
                  "value": "[parameters('logAnalytics')]"
                },
                "logsEnabledLAWBoolean": {
                  "value": "[parameters('logsEnabledLAWBoolean')]"
                },
                "metricsEnabledLAWBoolean": {
                  "value": "[parameters('metricsEnabledLAWBoolean')]"
                },
                "profileNameLAW": {
                  "value": "[parameters('profileNameLAW')]"
                }
              }
            }
          }
        }
      }
    }
  }
}

Use Case for Template 1

We will use Application Insights to test the above template and see how it works.

Step 1: Create a policy definition in the Azure portal, providing the subscription and policy name.

Step 2: Copy the template and add it under the policy rule inside the policy definition.

Step 3: Update the policy name and resource type wherever the template indicates, then save the policy.

Step 4: Once it is saved, click Assign and assign the policy.

Step 5: Check the compliance report to see whether the resource is marked as compliant or non-compliant, and take the necessary action accordingly.
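Step 3 can also be scripted so that the placeholders are filled in the same way for every resource type. The sketch below assumes the template has been saved as valid JSON text (straight quotes) that still contains the two placeholder strings used in this post; the function name, the policy name in the usage example, and the cut-down demo template are hypothetical.

```python
import json
from typing import Any, Dict

NAME_PLACEHOLDER = "(Name of Policy)"
RESOURCE_TYPE_PLACEHOLDER = "(please put resource type here)"


def fill_policy_template(template_text: str,
                         policy_name: str,
                         resource_type: str) -> Dict[str, Any]:
    """Replace the placeholders and return the parsed policy definition.

    Raises ValueError if the resource-type placeholder is missing, so a
    half-edited template is not silently accepted.
    """
    if RESOURCE_TYPE_PLACEHOLDER not in template_text:
        raise ValueError("template has no resource-type placeholder")
    filled = (template_text
              .replace(NAME_PLACEHOLDER, policy_name)
              .replace(RESOURCE_TYPE_PLACEHOLDER, resource_type))
    # json.loads also acts as a check that the result is still valid JSON.
    return json.loads(filled)


# Cut-down stand-in for the full template, just to show the call.
demo_template = """{
  "name": "(Name of Policy)",
  "policyRule": {
    "if": {"field": "type", "equals": "(please put resource type here)"}
  }
}"""

policy = fill_policy_template(demo_template,
                              "diag-to-law-appinsights",
                              "Microsoft.Insights/components")
print(json.dumps(policy, indent=2))
```

Because every occurrence of the placeholder is replaced, the resource type in the "if" condition and in the deployment resource stay in sync, which is the part most easily missed when copying by hand.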

Template 2 (With Count Variable)

In certain scenarios, especially when dealing with compliance issues, the basic template (Template 1) may not be sufficient. One common issue you might encounter is misleading compliance results, where Azure resources return ambiguous or incorrect compliance states like [true, false]. This inconsistency can make it difficult to correctly assess resource compliance, especially in environments with a large number of Azure resources.

To mitigate this, we introduce Template 2, which utilizes a count variable to provide a more accurate and reliable logging mechanism for sending logs to your Log Analytics Workspace. By including a count variable, this template ensures that compliance statuses are clearly and correctly represented in your logs, eliminating false or misleading messages.
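The difference between the two existence conditions can be illustrated with a small simulation. This is only a model of how the conditions are understood to behave (a condition on logs[*].enabled has to hold for every element of the array, while a count condition needs only enough matching elements); it is not Azure Policy code, and the sample log settings are invented for the example.

```python
from typing import Dict, List

LogSetting = Dict[str, object]


def every_log_enabled(logs: List[LogSetting]) -> bool:
    """Template 1 style: logs[*].enabled must be true for every element."""
    return all(entry.get("enabled") is True for entry in logs)


def count_enabled_all_logs(logs: List[LogSetting]) -> int:
    """Template 2 style: count the elements that are enabled allLogs entries."""
    return sum(
        1 for entry in logs
        if entry.get("enabled") is True
        and entry.get("categoryGroup") == "allLogs"
    )


def count_condition_met(logs: List[LogSetting]) -> bool:
    """The count must be greater than or equal to 1."""
    return count_enabled_all_logs(logs) >= 1


# Invented example: allLogs is enabled, but one extra category is disabled.
mixed_logs: List[LogSetting] = [
    {"categoryGroup": "allLogs", "enabled": True},
    {"category": "FunctionAppLogs", "enabled": False},
]

print(every_log_enabled(mixed_logs))    # the per-element check fails
print(count_condition_met(mixed_logs))  # the count-based check passes
```

With a mixed array like this, the per-element values are [true, false], which matches the ambiguous compliance reason described above, while the count-based rule gives a single clear answer.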

Reference For Template 2:

{
  "name": "(Name of Policy)",
  "type": "Microsoft.Authorization/policyDefinitions",
  "apiVersion": "2022-09-01",
  "scope": null,
  "properties": {
    "displayName": "(Policy Display Name)",
    "policyType": "Custom",
    "description": "(policy description)",
    "mode": "All",
    "metadata": {
      "version": "1.0.0",
      "category": "(as per product)"
    },
    "parameters": {
      "profileNameLAW": {
        "type": "String",
        "metadata": {
          "displayName": "Profile name",
          "description": "The diagnostic settings profile name"
        },
        "defaultValue": "setbypolicyLAW"
      },
      "evaluationDelay": {
        "type": "String",
        "metadata": {
          "displayName": "Evaluation Delay",
          "description": "Specifies when the existence of the related resources should be evaluated. The delay is only used for evaluations that are a result of a create or update resource request. Allowed values are AfterProvisioning, AfterProvisioningSuccess, AfterProvisioningFailure, or an ISO 8601 duration between 0 and 360 minutes."
        },
        "defaultValue": "AfterProvisioning"
      },
      "effect": {
        "type": "String",
        "metadata": {
          "displayName": "Effect",
          "description": "Enable or disable the execution of the policy"
        },
        "allowedValues": [
          "DeployIfNotExists",
          "AuditIfNotExists",
          "Disabled"
        ],
        "defaultValue": "DeployIfNotExists"
      },
      "logAnalytics": {
        "type": "String",
        "metadata": {
          "displayName": "Log Analytics workspace",
          "description": "Select the Log Analytics workspace from dropdown list",
          "strongType": "omsWorkspace",
          "assignPermissions": true
        }
      },
      "metricsEnabledLAWBoolean": {
        "type": "Boolean",
        "metadata": {
          "displayName": "Enable metrics",
          "description": "Whether to enable metrics stream to the Log Analytics workspace – True or False"
        },
        "allowedValues": [
          true,
          false
        ],
        "defaultValue": true
      },
      "logsEnabledLAWBoolean": {
        "type": "Boolean",
        "metadata": {
          "displayName": "Enable logs",
          "description": "Whether to enable logs stream to the Log Analytics workspace – True or False"
        },
        "allowedValues": [
          true,
          false
        ],
        "defaultValue": true
      }
    },
    "policyRule": {
      "if": {
        "field": "type",
        "equals": "(please put resource type here)"
      },
      "then": {
        "effect": "[parameters('effect')]",
        "details": {
          "type": "Microsoft.Insights/diagnosticSettings",
          "name": "[parameters('profileNameLAW')]",
          "evaluationDelay": "[parameters('evaluationDelay')]",
          "roleDefinitionIds": [
            "/providers/Microsoft.Authorization/roleDefinitions/b24988ac-6180-42a0-ab88-20f7382dd24c"
          ],
          "existenceCondition": {
            "allOf": [
              {
                "count": {
                  "field": "Microsoft.Insights/diagnosticSettings/logs[*]",
                  "where": {
                    "allOf": [
                      {
                        "field": "Microsoft.Insights/diagnosticSettings/logs[*].enabled",
                        "equals": "true"
                      },
                      {
                        "field": "Microsoft.Insights/diagnosticSettings/logs[*].categoryGroup",
                        "equals": "allLogs"
                      }
                    ]
                  }
                },
                "greaterOrEquals": 1
              },
              {
                "field": "Microsoft.Insights/diagnosticSettings/metrics[*].enabled",
                "equals": "[parameters('metricsEnabledLAWBoolean')]"
              }
            ]
          },
          "deployment": {
            "properties": {
              "mode": "incremental",
              "template": {
                "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
                "contentVersion": "1.0.0.0",
                "parameters": {
                  "resourceName": {
                    "type": "string"
                  },
                  "logAnalytics": {
                    "type": "string"
                  },
                  "logsEnabledLAWBoolean": {
                    "type": "bool"
                  },
                  "metricsEnabledLAWBoolean": {
                    "type": "bool"
                  },
                  "profileNameLAW": {
                    "type": "string"
                  },
                  "location": {
                    "type": "string"
                  }
                },
                "variables": {},
                "resources": [
                  {
                    "type": "(please put resource type here)/providers/diagnosticSettings",
                    "apiVersion": "2021-05-01-preview",
                    "name": "[concat(parameters('resourceName'), '/', 'Microsoft.Insights/', parameters('profileNameLAW'))]",
                    "location": "[parameters('location')]",
                    "dependsOn": [],
                    "properties": {
                      "workspaceId": "[parameters('logAnalytics')]",
                      "metrics": [
                        {
                          "category": "AllMetrics",
                          "timeGrain": null,
                          "enabled": "[parameters('metricsEnabledLAWBoolean')]"
                        }
                      ],
                      "logs": [
                        {
                          "categoryGroup": "allLogs",
                          "enabled": "[parameters('logsEnabledLAWBoolean')]"
                        }
                      ]
                    }
                  }
                ],
                "outputs": {}
              },
              "parameters": {
                "profileNameLAW": {
                  "value": "[parameters('profileNameLAW')]"
                },
                "logAnalytics": {
                  "value": "[parameters('logAnalytics')]"
                },
                "metricsEnabledLAWBoolean": {
                  "value": "[parameters('metricsEnabledLAWBoolean')]"
                },
                "logsEnabledLAWBoolean": {
                  "value": "[parameters('logsEnabledLAWBoolean')]"
                },
                "location": {
                  "value": "[field('location')]"
                },
                "resourceName": {
                  "value": "[field('name')]"
                }
              }
            }
          }
        }
      }
    }
  }
}

Use Case for Template 2

We will use Function Apps to test the above template, because with Template 1 you will see issues in the compliance reasons.

Step 1: Create a policy definition in the Azure portal, providing the subscription and policy name.

Step 2: Copy the template and add it under the policy rule inside the policy definition.

Step 3: Update the policy name and resource type wherever the template indicates, then save the policy.

Step 4: Once it is saved, click Assign and assign the policy.

Step 5: Check the compliance report to see whether the resource is marked as compliant or non-compliant, and take the necessary action accordingly.

Microsoft Tech Community – Latest Blogs

Azure Kubernetes Service Baseline – The Hard Way, Part Deux

Azure Kubernetes Service Workload Protection

The objective of this blog is to provide a concise guide on how to increase the security of your Kubernetes workloads by implementing “Workload Identity” and network policy in AKS. These features enable secure access to Azure KeyVault and control traffic flow between pods, providing an additional layer of security for your AKS cluster. Finally, as the icing on the cake, there are instructions on how to use Defender for Containers to detect vulnerabilities in container images.

By following the steps in this guide, you will learn how to configure workload identity, network policy in AKS and Azure KeyVault.

Preconditions

This post builds on a previous blog, https://techcommunity.microsoft.com/t5/apps-on-azure-blog/azure-kubernetes-service-baseline-the-hard-way/ba-p/4130496. The instructions there will equip you with a private AKS cluster and a lot of surrounding services, such as Azure Container Registry, Application Gateway with WAF, etc. If you have completed the instructions there, you can simply continue with this guide.

You could also create a simpler AKS cluster using the Microsoft quickstart guides here: https://learn.microsoft.com/en-us/azure/aks/tutorial-kubernetes-prepare-app?tabs=azure-cli. If you do, however, you will have to populate some environment variables to match the instructions below, e.g. AKS_CLUSTER_NAME and STUDENT_NAME.

1.1 Deployment

First, create some environment variables, to make life easier.

1.1.1 Prepare Environment Variables for Infrastructure

[!Note] The Azure KeyVault name is a global name that must be unique.

FRONTEND_NAMESPACE="frontend"
BACKEND_NAMESPACE="backend"
SERVICE_ACCOUNT_NAME="workload-identity-sa"
SUBSCRIPTION="$(az account show --query id --output tsv)"
USER_ASSIGNED_IDENTITY_NAME="keyvaultreader"
FEDERATED_IDENTITY_CREDENTIAL_NAME="keyvaultfederated"
KEYVAULT_NAME="<DEFINE A KEYVAULT NAME HERE>"
KEYVAULT_SECRET_NAME="redissecret"
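Because the Key Vault name has to be chosen by hand, it can help to check it locally before calling Azure. The sketch below is not part of the original walkthrough: `is_valid_keyvault_name` is a hypothetical helper that checks the documented naming rules (3 to 24 characters, letters, digits and hyphens only, starting with a letter, ending with a letter or digit, no consecutive hyphens). It cannot tell whether the name is globally unique; only Azure can answer that.

```shell
#!/usr/bin/env bash
# Hypothetical helper (an assumption, not from the original post):
# returns 0 if the candidate name satisfies the Key Vault naming rules.
is_valid_keyvault_name() {
  local name="$1"
  # 3-24 characters: a leading letter, 1-22 letters/digits/hyphens,
  # and a trailing letter or digit.
  [[ "$name" =~ ^[A-Za-z][A-Za-z0-9-]{1,22}[A-Za-z0-9]$ ]] || return 1
  # Consecutive hyphens are not allowed.
  [[ "$name" != *--* ]]
}

# Usage:
#   is_valid_keyvault_name "$KEYVAULT_NAME" || echo "invalid Key Vault name"
```

A local check like this only catches formatting mistakes early; a name that passes can still be rejected if it is already taken.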

1.1.2 Update AKS Cluster with OIDC Issuer

Enable the existing cluster to use OpenID Connect (OIDC) as an authentication protocol for the Kubernetes API server (unless already done). This allows the cluster to integrate with Microsoft Entra ID and other identity providers that support OIDC.

az aks update -g $SPOKE_RG -n $AKS_CLUSTER_NAME-${STUDENT_NAME} --enable-oidc-issuer

Get the OIDC issuer URL. Query the AKS cluster for the OIDC issuer URL with the following command, which stores the result in an environment variable.

AKS_OIDC_ISSUER="$(az aks show -n $AKS_CLUSTER_NAME-${STUDENT_NAME} -g $SPOKE_RG --query "oidcIssuerProfile.issuerUrl" -otsv)"

The variable should contain the Issuer URL similar to the following: https://eastus.oic.prod-aks.azure.com/9e08065f-6106-4526-9b01-d6c64753fe02/9a518161-4400-4e57-9913-d8d82344b504/
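The issuer URL is reused later when the federated credential is created, so an empty or truncated value is worth catching here. The following is a minimal sketch, assuming only what the example above shows: the value is an https URL that ends with a slash. `is_plausible_issuer_url` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper (an assumption, not from the original post):
# returns 0 if the value is non-empty, starts with https:// and ends with "/".
is_plausible_issuer_url() {
  local url="$1"
  [[ -n "$url" && "$url" == https://*/ ]]
}

# Usage:
#   is_plausible_issuer_url "$AKS_OIDC_ISSUER" || echo "AKS_OIDC_ISSUER looks wrong"
```

This is a shape check only; it does not confirm that the URL belongs to your cluster.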

1.1.3 Create Azure KeyVault

Create the Azure Keyvault instance. When creating the Keyvault, use “deny as a default” action for the network access policy, which means that only the specified IP addresses or virtual networks can access the key vault.

Your bastion host will be allowed, so use that one when you interact with Keyvault later.

az keyvault create -n $KEYVAULT_NAME -g $SPOKE_RG -l $LOCATION --default-action deny

Create a private DNS zone for the Azure Keyvault.

az network private-dns zone create --resource-group $SPOKE_RG --name privatelink.vaultcore.azure.net

Link the private DNS zone to the hub and spoke virtual networks.

az network private-dns link vnet create --resource-group $SPOKE_RG --virtual-network $HUB_VNET_ID --zone-name privatelink.vaultcore.azure.net --name hubvnetkvdnsconfig --registration-enabled false

az network private-dns link vnet create --resource-group $SPOKE_RG --virtual-network $SPOKE_VNET_NAME --zone-name privatelink.vaultcore.azure.net --name spokevnetkvdnsconfig --registration-enabled false

Create a private endpoint for the Keyvault.

First we need to obtain the KeyVault ID in order to deploy the private endpoint.

KEYVAULT_ID=$(az keyvault show --name $KEYVAULT_NAME --query 'id' --output tsv)

Create the private endpoint in the endpoint subnet.

az network private-endpoint create --resource-group $SPOKE_RG --vnet-name $SPOKE_VNET_NAME --subnet $ENDPOINTS_SUBNET_NAME --name KVPrivateEndpoint --private-connection-resource-id $KEYVAULT_ID --group-ids vault --connection-name PrivateKVConnection --location $LOCATION

Fetch the IP address of the private endpoint and create an A record in the private DNS zone.

Obtain the IP address of the private endpoint NIC.

KV_PRIVATE_IP=$(az network private-endpoint show -g $SPOKE_RG -n KVPrivateEndpoint --query 'customDnsConfigs[0].ipAddresses[0]' --output tsv)

Note the private IP address down.

echo $KV_PRIVATE_IP

Create the A record set in the DNS zone.

az network private-dns record-set a create --name $KEYVAULT_NAME --zone-name privatelink.vaultcore.azure.net --resource-group $SPOKE_RG

Point the A record to the private endpoint IP of the Keyvault.

az network private-dns record-set a add-record -g $SPOKE_RG -z "privatelink.vaultcore.azure.net" -n $KEYVAULT_NAME -a $KV_PRIVATE_IP

Validate your deployment in the Azure portal.

Navigate to the Azure portal at https://portal.azure.com and enter your login credentials.

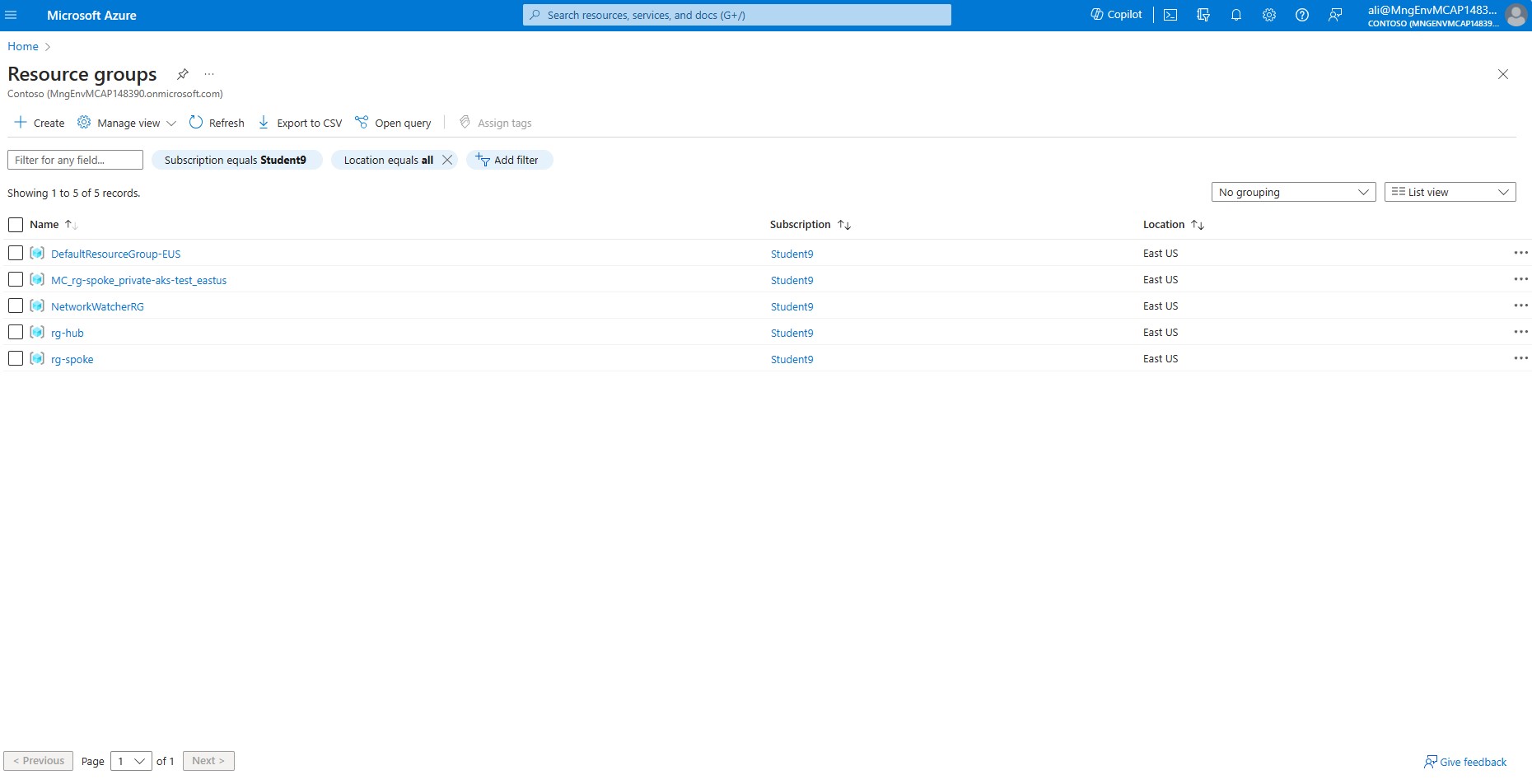

Once logged in, click on Resource groups to view all of the resource groups in your subscription. You should see the 3 resource groups created during previous steps: MC_rg-spoke_private-aks-xxxx_eastus, rg-hub and rg-spoke.

Select rg-spoke.

Verify that the following resources exist: an Azure KeyVault instance, a KeyVault private endpoint, a private endpoint network interface, and a private DNS zone called privatelink.vaultcore.azure.net.

Select the private DNS zone called privatelink.vaultcore.azure.net.

In the left-hand side menu, under Settings, click on Virtual network links.

Validate that there is a link called hubvnetkvdnsconfig whose link status is Completed and whose virtual network is set to Hub_VNET. Ensure you also have a link to the spoke (spokevnetkvdnsconfig), and that its link status is Completed as well.

On the top menu, click on Home. Select Resource Groups, then click on the resource group called rg-hub.

Select the virtual machine called Jumpbox-VM.

In the left-hand side menu, under the Connect section, select 'Bastion'. Enter the credentials for the Jumpbox VM and verify that you can log in successfully.

In the Jumpbox VM command line, type the following command and ensure it returns the private IP address of the private endpoint.

dig <KEYVAULT NAME>.vault.azure.net

Example output:

azureuser@Jumpbox-VM:~$ dig alibengtssonkeyvault.vault.azure.net

; <<>> DiG 9.18.12-0ubuntu0.22.04.3-Ubuntu <<>> alibengtssonkeyvault.vault.azure.net
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 22014
;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 65494
;; QUESTION SECTION:
;alibengtssonkeyvault.vault.azure.net. IN A

;; ANSWER SECTION:
alibengtssonkeyvault.vault.azure.net. 60 IN CNAME alibengtssonkeyvault.privatelink.vaultcore.azure.net.
alibengtssonkeyvault.privatelink.vaultcore.azure.net. 1800 IN A 10.1.2.6

;; Query time: 12 msec
;; SERVER: 127.0.0.53#53(127.0.0.53) (UDP)
;; WHEN: Sun Oct 08 16:41:05 UTC 2023
;; MSG SIZE rcvd: 138
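The point of the dig check above is that the vault name resolves to a private address (10.1.2.6 in the example) rather than a public one. As a rough sketch of how that could be checked in a script, the hypothetical helper `is_private_ipv4` below tests whether an address falls in one of the RFC 1918 private ranges. Which range your endpoint subnet actually uses is an assumption you need to verify against your own network.

```shell
#!/usr/bin/env bash
# Hypothetical helper (an assumption, not from the original post):
# returns 0 if the argument is an IPv4 address in 10.0.0.0/8,
# 172.16.0.0/12 or 192.168.0.0/16.
is_private_ipv4() {
  local a b c d extra octet
  IFS=. read -r a b c d extra <<< "$1"
  # Exactly four dot-separated parts are expected.
  [[ -z "$extra" ]] || return 1
  for octet in "$a" "$b" "$c" "$d"; do
    # Each part must be 1-3 digits and at most 255 (10# forces base 10).
    [[ "$octet" =~ ^[0-9]{1,3}$ ]] && (( 10#$octet <= 255 )) || return 1
  done
  (( 10#$a == 10 )) && return 0
  (( 10#$a == 172 && 10#$b >= 16 && 10#$b <= 31 )) && return 0
  (( 10#$a == 192 && 10#$b == 168 )) && return 0
  return 1
}

# Usage on the jumpbox (dig +short prints only the answer records):
#   ip="$(dig +short "$KEYVAULT_NAME.vault.azure.net" | tail -n 1)"
#   is_private_ipv4 "$ip" && echo "resolves privately: $ip"
```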

Now, you should have an infrastructure that looks like this:

1.1.4 Add a Secret to Azure KeyVault

We have successfully created an instance of Azure KeyVault with a private endpoint and set up a private DNS zone to resolve the Azure KeyVault instance from the hub and spoke using Virtual Network links. Additionally, we have updated our AKS cluster to support OIDC, enabling workload identity.

The next step is to add a secret to Azure KeyVault instance.

[!IMPORTANT] Because the Azure KeyVault is isolated in a VNET, you need to access it from the Jumpbox VM. Please log in to the Jumpbox VM, and set a few environment variables (or load all environment variables you stored in a file):

SPOKE_RG=rg-spoke
LOCATION=eastus
FRONTEND_NAMESPACE="frontend"
BACKEND_NAMESPACE="backend"
SERVICE_ACCOUNT_NAME="workload-identity-sa"
SUBSCRIPTION="$(az account show --query id --output tsv)"
USER_ASSIGNED_IDENTITY_NAME="keyvaultreader"
FEDERATED_IDENTITY_CREDENTIAL_NAME="keyvaultfederated"
KEYVAULT_SECRET_NAME="redissecret"
AKS_CLUSTER_NAME=private-aks
KEYVAULT_NAME="<WRITE YOUR KEYVAULT NAME HERE>"
STUDENT_NAME="<WRITE YOUR STUDENT NAME HERE>"
ACR_NAME="<NAME OF THE AZURE CONTAINER REGISTRY>"

From the Jumpbox VM create a secret in the Azure KeyVault. This is the secret that will be used by the frontend application to connect to the (redis) backend.

az keyvault secret set --vault-name $KEYVAULT_NAME --name $KEYVAULT_SECRET_NAME --value 'redispassword'

1.1.5 Add the KeyVault URL to the Environment Variable KEYVAULT_URL

export KEYVAULT_URL="$(az keyvault show -g $SPOKE_RG -n $KEYVAULT_NAME --query properties.vaultUri -o tsv)"

1.1.6 Create a managed identity and grant permissions to access the secret

Create a User Managed Identity. We will give this identity GET access to the keyvault, and later associate it with a Kubernetes service account.

az account set --subscription $SUBSCRIPTION

az identity create --name $USER_ASSIGNED_IDENTITY_NAME --resource-group $SPOKE_RG --location $LOCATION --subscription $SUBSCRIPTION

Set an access policy for the managed identity to access the Key Vault.

export USER_ASSIGNED_CLIENT_ID="$(az identity show --resource-group $SPOKE_RG --name $USER_ASSIGNED_IDENTITY_NAME --query 'clientId' -otsv)"

az keyvault set-policy --name $KEYVAULT_NAME --secret-permissions get --spn $USER_ASSIGNED_CLIENT_ID

1.1.7 Connect to the Cluster

First, connect to the cluster if not already connected.

az aks get-credentials -n $AKS_CLUSTER_NAME-${STUDENT_NAME} -g $SPOKE_RG

1.1.8 Create Service Account

The service account should exist in the frontend namespace, because it’s the frontend service that will use that service account to get the credentials to connect to the (redis) backend service.

[!Note] Instead of creating Kubernetes manifest files, we will create the resources on the command line as shown below. In a real-life case, you would create manifest files and store them in a version control system, like git.

First create the frontend namespace.

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Namespace
metadata:
  name: $FRONTEND_NAMESPACE
  labels:
    name: $FRONTEND_NAMESPACE
EOF

Verify that the namespace called frontend has been created.

kubectl get ns

Then create a service account in that namespace. Notice the annotation for workload identity.

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: $FRONTEND_NAMESPACE
  annotations:
    azure.workload.identity/client-id: $USER_ASSIGNED_CLIENT_ID
  name: $SERVICE_ACCOUNT_NAME
EOF

1.1.9 Establish Federated Identity Credential

In this step we connect the Kubernetes service account with the user defined managed identity in Azure, using a federated credential.

az identity federated-credential create --name $FEDERATED_IDENTITY_CREDENTIAL_NAME --identity-name $USER_ASSIGNED_IDENTITY_NAME --resource-group $SPOKE_RG --issuer $AKS_OIDC_ISSUER --subject system:serviceaccount:$FRONTEND_NAMESPACE:$SERVICE_ACCOUNT_NAME
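The subject passed to the federated credential has the fixed shape `system:serviceaccount:<namespace>:<service account name>`, and it has to match the service account the pod actually runs as. A minimal sketch of building that string in one place, so the namespace and name cannot drift apart, could look like this (`federated_subject` is a hypothetical helper name, not something the original post defines):

```shell
#!/usr/bin/env bash
# Hypothetical helper (an assumption, not from the original post):
# prints the subject string for a Kubernetes service account.
federated_subject() {
  local namespace="$1" service_account="$2"
  printf 'system:serviceaccount:%s:%s\n' "$namespace" "$service_account"
}

# Usage:
#   SUBJECT="$(federated_subject "$FRONTEND_NAMESPACE" "$SERVICE_ACCOUNT_NAME")"
#   echo "$SUBJECT"
```

With the values used in this guide, the result is the same string that the command above assembles inline.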

1.1.10 Build the Application

Now it's time to build the application. In order to do so, first clone the application's repository:

git clone https://github.com/pelithne/az-vote-with-workload-identity.git

In order to push images, you may have to log in to the registry first using your Azure AD identity:

az acr login --name $ACR_NAME

Then run the following commands to build, tag and push your container image to the Azure Container Registry

cd az-vote-with-workload-identity

cd azure-vote

docker build -t azure-vote-front:v1 .

docker tag azure-vote-front:v1 $ACR_NAME.azurecr.io/azure-vote-front:v1

docker push $ACR_NAME.azurecr.io/azure-vote-front:v1

The string after : is the image tag. This can be used to manage versions of your app, but in this case we will only have one version.
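To make the tag convention concrete, here is a small sketch that extracts the tag from an image reference. `image_tag` is a hypothetical helper; it assumes the usual `repository:tag` form, falls back to `latest` when no tag is given (Docker's default), and deliberately does not handle digest references such as `name@sha256:...`.

```shell
#!/usr/bin/env bash
# Hypothetical helper (an assumption, not from the original post):
# prints the tag of an image reference, or "latest" if none is given.
image_tag() {
  # Only look at the last path segment, so a registry port such as
  # localhost:5000 is not mistaken for a tag.
  local last="${1##*/}"
  if [[ "$last" == *:* ]]; then
    printf '%s\n' "${last##*:}"
  else
    printf 'latest\n'
  fi
}

# Usage:
#   image_tag "$ACR_NAME.azurecr.io/azure-vote-front:v1"
```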

1.1.11 Deploy the Application

We want to create some separation between the frontend and backend, by deploying them into different namespaces. Later we will add more separation by introducing network policies in the cluster to allow/disallow traffic between specific namespaces.

First, create the backend namespace.

[!Note] Instead of creating Kubernetes manifest files, we put the manifests inline for convenience. Feel free to create YAML manifests instead if you like.

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Namespace
metadata:
  name: backend
  labels:
    name: backend
EOF

Verify that the namespace called backend has been created.

kubectl get ns

Then create the backend application, a Redis store that we will use as a "database". Notice how we inject a password to Redis using an environment variable (not best practice obviously, but for simplicity).

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: azure-vote-back
  namespace: $BACKEND_NAMESPACE
spec:
  replicas: 1
  selector:
    matchLabels:
      app: azure-vote-back
  template:
    metadata:
      labels:
        app: azure-vote-back
    spec:
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: azure-vote-back
        image: mcr.microsoft.com/oss/bitnami/redis:6.0.8
        ports:
        - containerPort: 6379
          name: redis
        env:
        - name: REDIS_PASSWORD
          value: "redispassword"
---
apiVersion: v1
kind: Service
metadata:
  name: azure-vote-back
  namespace: $BACKEND_NAMESPACE
spec:
  ports:
  - port: 6379
  selector:
    app: azure-vote-back
EOF

Then create the frontend. We already created the frontend namespace in an earlier step, so just go ahead and create the frontend app in the frontend namespace.

[!Note] A few things worth noting:

azure.workload.identity/use: "true" is a label that tells AKS that workload identity should be used.

serviceAccountName: $SERVICE_ACCOUNT_NAME specifies that this resource is connected to the service account created earlier.

image: $ACR_NAME.azurecr.io/azure-vote-front:v1 is the image with the application built in a previous step.

service.beta.kubernetes.io/azure-load-balancer-ipv4: $ILB_EXT_IP "hard codes" the IP address of the internal LB to match what was previously configured in App GW as backend.

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: azure-vote-front
  namespace: $FRONTEND_NAMESPACE
  labels:
    azure.workload.identity/use: "true"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: azure-vote-front
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  minReadySeconds: 5
  template:
    metadata:
      labels:
        app: azure-vote-front
        azure.workload.identity/use: "true"
    spec:
      serviceAccountName: $SERVICE_ACCOUNT_NAME
      nodeSelector:
        "kubernetes.io/os": linux
      containers:
      - name: azure-vote-front
        image: $ACR_NAME.azurecr.io/azure-vote-front:v1
        ports:
        - containerPort: 80
        resources:
          requests:
            cpu: 250m
          limits:
            cpu: 500m
        env:
        - name: REDIS
          value: "azure-vote-back.backend"
        - name: KEYVAULT_URL
          value: $KEYVAULT_URL
        - name: SECRET_NAME
          value: $KEYVAULT_SECRET_NAME
---
apiVersion: v1
kind: Service
metadata:
  name: azure-vote-front
  namespace: $FRONTEND_NAMESPACE
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
    service.beta.kubernetes.io/azure-load-balancer-internal-subnet: "loadbalancer-subnet"
spec:
  type: LoadBalancer
  ports:
  - port: 80
  selector:
    app: azure-vote-front
EOF

1.1.12 Validate the Application

To test if the application is working, you can navigate to the URL used before to reach the nginx test application. This time the request will be redirected to the Azure Vote frontend instead. If that works, it means that the Azure Vote frontend pod was able to fetch the secret from Azure KeyVault, and use it when connecting to the backend (Redis) service/pod.

You can also verify in the application logs that the frontend was able to connect to the backend.

To do that, you need to find the name of the pod:

kubectl get pods --namespace frontend

This should give a result similar to this:

NAME                                READY   STATUS    RESTARTS         AGE
azure-vote-front-85d6c66c4d-pgtw9   1/1     Running   29 (7m3s ago)    3h13m

Now you can read the logs of the application by running this command (but with YOUR pod name):

kubectl logs azure-vote-front-85d6c66c4d-pgtw9 --namespace frontend

You should be able to find a line like this:

Connecting to Redis… azure-vote-back.backend

And then a little later:

Connected to Redis!
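Copying the generated pod name by hand gets tedious, since it changes every time the pod is recreated. As a sketch (not part of the original steps), the pod name can be picked out of the `kubectl get pods` output by its prefix. `first_pod_with_prefix` is a hypothetical helper that reads that output on standard input.

```shell
#!/usr/bin/env bash
# Hypothetical helper (an assumption, not from the original post):
# reads `kubectl get pods` output on stdin and prints the first pod
# whose name starts with the given prefix.
first_pod_with_prefix() {
  local prefix="$1"
  awk -v p="$prefix" 'index($1, p) == 1 { print $1; exit }'
}

# Usage (requires cluster access):
#   POD="$(kubectl get pods --namespace frontend | first_pod_with_prefix azure-vote-front-)"
#   kubectl logs "$POD" --namespace frontend
```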

1.1.13 Workload Network Policy

The cluster is deployed with Azure network policies. Network policies can be used to control traffic between resources in Kubernetes.

This first policy prevents traffic into the backend namespace from other namespaces. Because the from clause uses an empty podSelector, pods inside the backend namespace can still reach each other.

cat <<EOF | kubectl apply -f -
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-ingress-to-backend
  namespace: backend
spec:
  podSelector: {}
  ingress:
  - from:
    - podSelector:
        matchLabels: {}
EOF
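For comparison, a stricter variant is the standard default-deny pattern, which selects every pod in the namespace and lists Ingress as a policy type without any allow rules. The sketch below is not part of the original walkthrough: `deny_all_ingress_manifest` is a hypothetical helper that only prints such a manifest for a given namespace, so it can be inspected before being piped to kubectl. The policy name `default-deny-ingress` is likewise an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper (an assumption, not from the original post):
# prints a default-deny ingress NetworkPolicy for the given namespace.
deny_all_ingress_manifest() {
  local namespace="$1"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: ${namespace}
spec:
  podSelector: {}
  policyTypes:
  - Ingress
EOF
}

# Usage (requires cluster access):
#   deny_all_ingress_manifest backend | kubectl apply -f -
```

Network policies are additive, so a policy that explicitly allows selected traffic into the namespace still takes effect alongside a default-deny policy like this one.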

Network policies are applied to new TCP connections. Because the frontend application has already created a persistent TCP connection with the backend, it might have to be redeployed for the policy to take effect. One way to do that is to simply delete the pod and let it recreate itself.

First find the pod name:

kubectl get pods --namespace frontend

This should give a result similar to this:

NAME                                READY   STATUS    RESTARTS         AGE
azure-vote-front-85d6c66c4d-pgtw9   1/1     Running   29 (7m3s ago)    3h13m

Now delete the pod with the following command (but with YOUR pod name):

kubectl delete pod --namespace frontend azure-vote-front-85d6c66c4d-pgtw9

After the deletion has finished, you should be able to see that the "AGE" of the pod has been reset.

kubectl get pods --namespace frontend

NAME                                READY   STATUS    RESTARTS   AGE
azure-vote-front-85d6c66c4d-9wtgd   1/1     Running   0          25s

You should also find that the frontend can no longer communicate with the backend and that when accessing the URL of the app, it will time out.

Now apply a new policy that allows traffic into the backend namespace from pods that have the label app: azure-vote-front.

cat <<EOF | kubectl apply -f -
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-from-frontend
  namespace: backend # apply the policy in the backend namespace
spec:
  podSelector:
    matchLabels:
      app: azure-vote-back # select the redis pod
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          name: frontend # allow traffic from the frontend namespace
      podSelector:
        matchLabels:
          app: azure-vote-front # allow traffic from the azure-vote-front pod
EOF

Once again you have to recreate the pod, so that it can establish a connection to the backend service. Alternatively, you can simply wait for Kubernetes to attempt to recreate the frontend pod.

First find the pod name:

kubectl get pods --namespace frontend

Then delete the pod (using the name of your pod):

kubectl delete pod --namespace frontend azure-vote-front-85d6c66c4d-pgtw9

This time, communication from azure-vote-front to azure-vote-back is allowed.

1.2 Defender for Containers

Finally, let's add Defender for Containers to your deployment to harden your environment even further.

Microsoft Defender for Containers is a cloud-native solution that helps you secure your containers and their applications. It protects your Kubernetes clusters from misconfigurations, vulnerabilities, and threats, whether they are running on Azure, AWS, GCP, or on-premises. With Microsoft Defender for Containers, you can:

Harden your environment by continuously monitoring your cluster configurations and applying best practices.

Assess your container images for known vulnerabilities and get recommendations to fix them.

Protect your nodes and clusters from run-time attacks and get alerts for suspicious activities.

Discover your data plane components and get insights into your Kubernetes and environment configuration.

Microsoft Defender for Containers is part of Microsoft Defender for Cloud, a comprehensive security solution that covers your cloud workloads and hybrid environments. You can enable it easily from the Azure portal and start improving your container security today. Learn more about Microsoft Defender for Containers from this article.

During this activity you will:

Scan container image for vulnerabilities.

Simulate a security alert for Kubernetes workload.

Investigate and review vulnerabilities.

Import the metasploit vulnerability emulator docker image from Docker Hub to your Azure container registry.

1.2.1 Prerequisites

Update the firewall to allow AKS to pull images from docker hub.

az network firewall application-rule create --collection-name 'aksdockerhub' --firewall-name $FW_NAME -n 'Allow_Azmon' --source-addresses '*' --protocols 'http=80' 'https=443' --target-fqdns "*.docker.io" "auth.docker.io" "registry-1.docker.io" "*.monitor.azure.com" "production.cloudflare.docker.com" --action Allow --priority 103 --resource-group $HUB_RG

Please make sure that Microsoft Defender for Containers is activated on your subscription. Here are the steps you can follow to enable it.

[!IMPORTANT] Before proceeding with this lab exercise, it is necessary to enable Microsoft Defender for Cloud.

Validate Microsoft Defender for Cloud in the Azure portal.

Navigate to the Azure portal at https://portal.azure.com and enter your login credentials.

Type in Microsoft Defender for Cloud in the search field.

From the drop down menu click on Microsoft Defender for Cloud.

Click on Environment settings in the left hand side menu under the Management section.

Expand the Tenant Root Group

On the far right hand side of the subscription click on the three dots to open the context menu.

From the context menu click on Edit settings.

Validate that Defender CSPM is enabled (toggle button set to On) and that monitoring coverage is set to Full.

Validate that the Containers plan is enabled (toggle button set to On) and that monitoring coverage is set to Full.

Log in to your jumpbox and make sure that the Defender security profile is deployed onto your AKS cluster by running the following command:

kubectl get po -n kube-system | grep -i microsoft

Example output:

azureuser@Jumpbox-VM:~$ kubectl get po -n kube-system | grep -i microsoft
microsoft-defender-collector-ds-5spnc                2/2   Running   0   79m
microsoft-defender-collector-ds-bqs96                2/2   Running   0   79m
microsoft-defender-collector-ds-fxh7r                2/2   Running   0   79m
microsoft-defender-collector-misc-65b968c857-nvnpk   1/1   Running   0   79m
microsoft-defender-publisher-ds-qzzl8                1/1   Running   0   79m
microsoft-defender-publisher-ds-vk8xl                1/1   Running   0   79m
microsoft-defender-publisher-ds-x6dxw                1/1   Running   0   79m
azureuser@Jumpbox-VM:~$

1.2.2 Generate a Security Alert for Kubernetes Workload

Log in to the jumpbox and launch a pod that executes a test command, to simulate a security alert in Microsoft Defender for Cloud.

Create a blank file with the following command.

vim mdctest.yaml

In the editor that appears, paste this YAML manifest, which will launch a pod named mdc-test with Ubuntu 18.04 as the container image.

apiVersion: v1
kind: Pod
metadata:
  name: mdc-test
spec:
  containers:
  - name: mdc-test
    image: ubuntu:18.04
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo sleeping; sleep 9600;done"]

Save and quit.

Apply the configuration in the file mdctest.yaml. This will create a Pod in the default namespace.

kubectl apply -f mdctest.yaml

Verify that the Pod is in a running state:

kubectl get pods

Example output:

NAME READY STATUS RESTARTS AGE

mdc-test 1/1 Running 0 8s

Let's run a bash shell inside the container named mdc-test. The bash shell is a common command-line interface that allows you to run various commands and scripts. To do this, we use the following command:

kubectl exec -it mdc-test -- bash

We now want to copy and run the echo binary, a program that prints its arguments to standard output. This is useful for testing the security alert system in Microsoft Defender for Cloud.

Copy the echo binary to a file named asc_alerttest_662jfi039n in the current working directory.

cp /bin/echo ./asc_alerttest_662jfi039n

Execute the copied echo binary and print "testing eicar pipe" to standard output.

./asc_alerttest_662jfi039n testing eicar pipe

Example output:

mdc@mdc-test: ./asc_alerttest_662jfi039n testing eicar pipe

testing eicar pipe

Verify that a security alert has been triggered in Microsoft Defender for Cloud.

Go to https://portal.azure.com in your browser and log in if needed.

Once you have successfully logged in to Azure, type Microsoft Defender for Cloud in the search field.

From the drop-down menu, click on Microsoft Defender for Cloud.

In the left-hand side menu, under the General section, click on Security alerts.

You should now see the security alert generated by Microsoft Defender for Cloud. Click on the alert.

Review the security assessment by clicking on View full details.

Once you have reviewed the details of the simulated security alert, change the status of the alert from Active to Resolved. The security alert will disappear from the security alert list.

Delete the running pod from the AKS cluster

kubectl delete pods mdc-test

1.2.3 Import Vulnerable image to Container Registry

Log in to your container registry:

az acr login --name $ACR_NAME

Download the docker image to your jumpbox VM:

docker pull docker.io/vulnerables/metasploit-vulnerability-emulator

Tag the docker image:

docker tag vulnerables/metasploit-vulnerability-emulator $ACR_NAME.azurecr.io/metasploit-vulnerability-emulator

Push the vulnerable docker image to azure container registry.

docker push $ACR_NAME.azurecr.io/metasploit-vulnerability-emulator

1.2.4 Review Microsoft Defender for Containers Recommendations

In this section, you will learn how to review the security recommendations that Defender for Containers generates for your clusters and containers. These recommendations are based on the continuous assessment of your configurations and the comparison with the initiatives applied to your subscriptions. You will also learn how to investigate and remediate the issues that are identified by Defender for Containers.

In the Azure portal, type Container registries in the search field.

From the drop down menu click on Container Registries.

Click on your container registry from the list.

On the menu to the left hand side click on Microsoft Defender for Cloud to view your recommendations.

Notice that we have a new recommendation called Container registry image should have vulnerability findings resolved

To learn how to fix the vulnerability, click on view additional recommendations in Defender for Cloud.

To get more details and mitigation steps for a specific vulnerability, select it from the list. Microsoft Defender for Cloud will guide you to harden your container image.

Select or search for a CVE under the vulnerabilities from the list.

Review the detailed recommendations from Microsoft Defender for Cloud.


Radio Button Protection

Hello everybody,

I would like to protect a worksheet so that it is possible to select radio buttons, but not to move or edit these radio buttons. Is there a way to do this?

It looks like only the opposite is possible – I can protect the fields so that they can be moved and edited, but not selected! That would be absolutely ridiculous, because there is no use case for this constellation!

Thanks for any tips.

Regards

Matthias


Monthly news – October 2024

Microsoft Defender XDR

Monthly news

October 2024 Edition

This is our monthly “What’s new” blog post, summarizing product updates and various new assets we released over the past month across our Defender products. In this edition, we are looking at all the goodness from September 2024. Defender for Cloud has its own Monthly News post; have a look at their blog space.

Legend:

Product videos

Webcast (recordings)

Docs on Microsoft

Blogs on Microsoft

GitHub

External

Improvements

Previews / Announcements

Unified Security Operations Platform: Microsoft Defender XDR & Microsoft Sentinel

(GA) The global search for entities in the Microsoft Defender portal is now generally available. The enhanced search results page centralizes the results from all entities. For more information, see Global search in the Microsoft Defender portal.

(GA) Copilot in Defender now includes the identity summary capability, providing instant insights into a user’s risk level, sign-in activity, and more. For more information, see Summarize identity information with Copilot in Defender, and read our announcement blog.

Detecting browser anomalies to disrupt attacks early. This blog post offers insights into utilizing browser anomalies and malicious sign-in traits to execute attack disruption at the earliest stages, preventing attackers from achieving their objectives.

Microsoft Defender Threat Intelligence customers can now view the latest featured threat intelligence articles in the Microsoft Defender portal home page. The Intel explorer page now also has an article digest that notifies them of the number of new Defender TI articles that were published since they last accessed the Defender portal.

Microsoft Defender XDR Unified RBAC permissions have been added for submitting inquiries to, and viewing responses from, Microsoft Defender Experts. You can also view responses to inquiries submitted to Ask Defender Experts through the email addresses you listed when submitting your inquiry, or in the Defender portal by navigating to Reports > Defender Experts messages.

Unlocking Real-World Security: Defending against Crypto mining attacks. Since we integrated cloud workload alerts, signals and asset information from Defender for Cloud into Defender XDR, we’ve seen its transformative impact in real-world scenarios. This integration enhances our ability to detect, investigate, and respond to sophisticated threats across hybrid and multi-cloud environments. This blog post explores a real scenario that showcases the power of this integration.

(GA) Advanced hunting context panes are now available in more experiences. This allows you to access the advanced hunting feature without leaving your current workflow.

For incidents and alerts generated by analytics rules, you can select Run query to explore the results of the related analytics rule.

In the analytics rule wizard’s Set rule logic step, you can select View query results to verify the results of the query you are about to set.

In the query resources report, you can view any of the queries by selecting the three dots on the query row and selecting Open in query editor.

For device entities involved in incidents or alerts, Go hunt is also available as one of the options after selecting the three dots on the device side panel.

Defender for Identity: the critical role of identities in automatic attack disruption. Read this blog post to learn about automatic attack disruption and how important it is to include Defender for Identity in your security strategy.

Microsoft Defender Vulnerability Management

Research Analysis and Guidance: Ensuring Android Security Update Adoption. Microsoft researchers analyzed anonymized and aggregated security patch level data from millions of Android devices enrolled with Microsoft Intune to better understand Android security update availability and adoption across Android device models. In this post, we describe our analysis, and we provide guidance to users and enterprises to keep their devices up to date against discovered vulnerabilities.

Microsoft Security Exposure Management

Ninja Show: In these two episodes, we explore Microsoft Security Exposure Management, learning how it quantifies risks, generates reports for key stakeholders, unifies the security stack, and optimizes attack surface management. Join us October 1 and 3 @ 9 AM PT to discover the tools and processes that power proactive risk management, helping organizations stay ahead of evolving threats > https://aka.ms/ninjashow. Recordings can be found on our YouTube playlist.

Microsoft Security Experts

Hunting with Microsoft Graph activity logs. Multiple products and logs are available to help with threat investigation and detection. In this blog post, we’ll explore the recent addition of Microsoft Graph activity logs, which has been made generally available.

Microsoft Defender Experts services are now HIPAA and ISO certified. We are pleased to announce that Microsoft Defender Experts for XDR and Microsoft Defender Experts for Hunting can help healthcare and life science customers in meeting their Health Insurance Portability and Accountability Act (HIPAA) obligations.

Microsoft IR Internship Blog Series “Microsoft Intern Experience – Through the eyes of DART Incident Response (IR) interns”. Interns at Microsoft’s Incident Response (IR) customer-facing business, the Detection and Response Team (DART), gain insight into what’s needed to be a cyber incident response investigator – and experience it first-hand with our team of IR threat hunters.

This blog series is based on interviews with interns about their internship experiences and written from a first-person perspective.

Microsoft Defender for Cloud Apps

(Preview) Enforce Edge in-browser when accessing business apps.

Administrators who understand the power of Microsoft Edge in-browser protection can now require their users to use Microsoft Edge when accessing corporate resources. A primary reason is security, since the barrier to circumventing session controls using Microsoft Edge is much higher than with reverse proxy technology. Click here for more details.

(Preview) Defender for Cloud Apps now supports connections to Mural accounts using app connector APIs, giving you visibility into and control over your organization’s Mural use.

For more information, see:

How Defender for Cloud Apps helps protect your Mural environment

Connect apps to get visibility and control with Microsoft Defender for Cloud Apps

Mural Help Center (external Link)

Removing the ability to email end users about blocked actions.

Effective October 1st, 2024, we will discontinue the feature that notifies end users via email when their action is blocked by session policies. Admins can no longer configure this setting when creating new session policies. Existing session policies with this setting will not trigger email notifications to end users when a block action occurs. End users will continue to receive the block message directly through the browser but will no longer receive block notifications via email.

Microsoft Defender for Office 365

Use the built-in Report button in Outlook: The built-in Report button in Outlook for Mac now supports the user reported settings experience to report messages as Phishing, Junk, and Not Junk.

Upcoming Ninja Show episode:

In-depth defense with dual-use scenario: We are joined by Senior Product Manager Manfred Fischer and Cloud Solution Architect Dominik Hoefling to explore the built-in protection mechanisms in Defender for Office 365. Tune into this episode as we dive deep into a dual-use scenario demonstration to learn how customers using third-party email filtering services can still leverage the powerful features and controls of Defender for Office 365.

Bulk Sender Insights in Microsoft Defender for Office 365: In this episode, Senior Product Manager Puneeth Kuthati explains the importance of bulk sender insights within Defender for Office 365. Discover how these insights help differentiate trustworthy bulk senders from potential threats, tackle the challenges of fine-tuning bulk email filters, and strike the right balance to ensure important emails reach your inbox without overwhelming it. By analyzing sender behavior and trends, organizations can strengthen email security, reduce unwanted bulk traffic, and minimize false positives.

Visit the Show page to add those episodes to your calendar: Virtual Ninja Training

Microsoft Defender for Identity

Defender for Identity: the critical role of identities in automatic attack disruption. Read this blog post to learn about automatic attack disruption and how important it is to include Defender for Identity in your security strategy.

Microsoft Security Blog

Microsoft is named a Leader in the 2024 Gartner® Magic Quadrant™ for Endpoint Protection Platforms. We are excited to announce that Gartner has named Microsoft a Leader in the 2024 Gartner® Magic Quadrant™ for Endpoint Protection Platforms for the fifth consecutive time.

Storm-0501: Ransomware attacks expanding to hybrid cloud environments

Microsoft has observed the threat actor tracked as Storm-0501 launching a multi-staged attack in which they compromised hybrid cloud environments and performed lateral movement from on-premises to cloud environments, leading to data exfiltration, credential theft, tampering, persistent backdoor access, and ransomware deployment.

Securing our future: September 2024 progress update on Microsoft’s Secure Future Initiative (SFI).

In November 2023, we introduced the Secure Future Initiative (SFI) to advance cybersecurity protection for Microsoft, our customers, and the industry. Since the initiative began, we’ve dedicated the equivalent of 34,000 full-time engineers to SFI—making it the largest cybersecurity engineering effort in history. And now, we’re sharing key updates and milestones from the first SFI Progress Report.

Microsoft Tech Community – Latest Blogs

Does Polyspace Server license Queue if all are in use?

I wanted to understand whether Jenkins jobs queue on Polyspace Server if all licenses are in use.

license, polyspace, polyspaceserver, server MATLAB Answers — New Questions

The size of nested cells

I have a cell array. Each cell contains an n by m cell. How can I get "m"?

cell array MATLAB Answers — New Questions

Unknown Error when initialising Matlab Runtime

I built a Python package using Library Compiler in MATLAB R2019b. When I tried to initialise the MATLAB Runtime after importing the package, an unknown SystemError occurred.

matlab compiler, python MATLAB Answers — New Questions

Plot Fourier Transform (FFT) Interpolation Model into future

Hi, I'm opening another discussion in the hope that more people will join it and someone will find the solution. I have a set of data which has been interpolated through the Fourier transform to build a function f(x)=A*cos(2*pi*F*x+P). All the coefficients have been successfully calculated and the function fits the data perfectly. The problem is that when I try to plot the equation for x values beyond the data, the function immediately tends to a stochastic behaviour (a small-amplitude, high-frequency wave), which doesn't make any sense.

In other words, after the interpolation period the function immediately collapses into its noise and the strong amplitudes disappear.

Can anyone solve this problem?

I attach the model.

Thanks to you all in advance.

fft, fourier, model, interpolation, amplitude, noise, function MATLAB Answers — New Questions
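For reference, here is a minimal Python sketch (standard library only) of the kind of model described in the question above. It is not the attached model. It assumes the coefficients come from a plain DFT of n evenly spaced real samples, and the names `dft`, `cosine_terms`, and `model` are illustrative. Under that assumption every frequency is a multiple of 1/n, so the sum of cosines is periodic with period n and should repeat the fitted data when evaluated further along x. If an extrapolation instead collapses into a small, fast wave, the amplitudes, frequencies, or phases used for plotting may not match the ones from the transform.

```python
import cmath
import math


def dft(samples):
    """Plain O(n^2) discrete Fourier transform of a real sequence."""
    n = len(samples)
    return [
        sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def cosine_terms(samples):
    """Return (A, F, P) triples so that sum(A*cos(2*pi*F*x + P))
    reproduces the samples at x = 0, 1, ..., n-1."""
    n = len(samples)
    spectrum = dft(samples)
    terms = []
    for k in range(n // 2 + 1):
        amplitude = abs(spectrum[k]) / n
        # Bins other than DC and Nyquist occur twice (k and n - k),
        # so their amplitude is doubled in the one-sided form.
        is_nyquist = (n % 2 == 0 and k == n // 2)
        if k != 0 and not is_nyquist:
            amplitude *= 2
        terms.append((amplitude, k / n, cmath.phase(spectrum[k])))
    return terms


def model(terms, x):
    """Evaluate f(x) = sum of A*cos(2*pi*F*x + P) over all terms."""
    return sum(a * math.cos(2 * math.pi * f * x + p) for a, f, p in terms)


# Illustrative data only: a slow oscillation plus a linear trend.
data = [math.sin(0.4 * t) + 0.3 * t for t in range(16)]
terms = cosine_terms(data)

# Inside the fitted range the model matches the data; one period later
# (x + n) it returns the same values instead of decaying into noise.
n = len(data)
max_fit_error = max(abs(model(terms, t) - data[t]) for t in range(n))
max_repeat_error = max(abs(model(terms, t + n) - data[t]) for t in range(n))
```

Because the extension is a periodic repetition, a model built this way cannot follow a trend outside the fitted window. It simply wraps back to the first sample.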

Could anyone explain MBD in software development process V-shape model?

Hi all,

I understood that in the V-shaped model, MBD covers three steps (software detailed design, coding, unit test).

But MBD also has validation and verification in the form of SIL, PIL, and HIL. Do these stand in for unit test, integration test, and system test?

mbd, v-shape model MATLAB Answers — New Questions

CA using nested DDL

PS C:\WINDOWS\system32> Get-Recipient -RecipientPreviewFilter ($FTE.RecipientFilter)